Gradient Descent

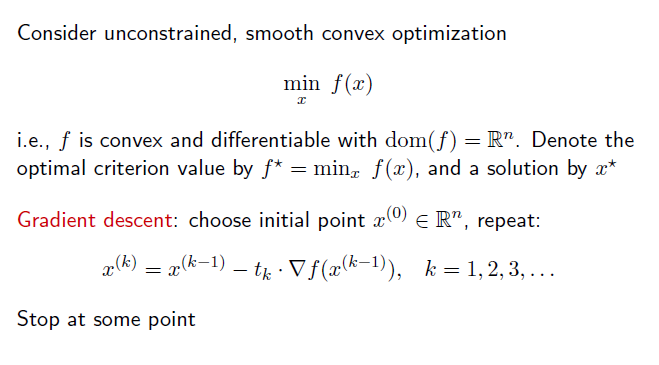

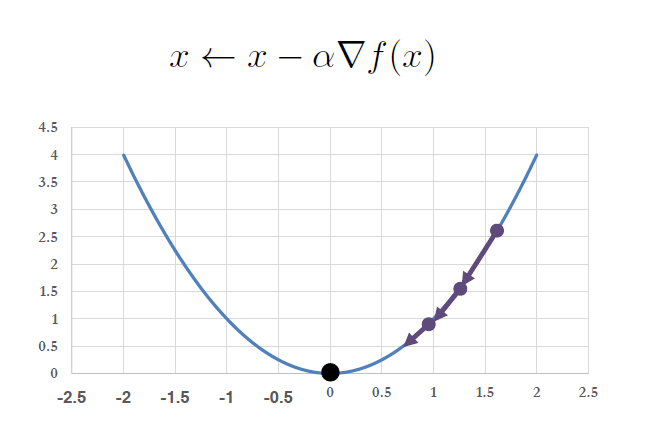

Gradient descent is an iterative algorithm for finding the parameters, say $x$, at which a function, let's call it $f$, reaches its minimum. It might seem obvious to just use calculus: take the derivative (or gradient), set it to zero, and solve. Gradient descent is what we use when we can't solve $\nabla f(x) = 0$ in closed form. It is mostly used to find the parameters of a machine learning model that minimize some loss function. The formal definition of gradient descent follows; you can generalize it further by treating $x$ as a vector.
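A minimal sketch of the idea for a one-dimensional $f$, assuming we can evaluate the derivative $f'(x)$ at any point. The function name `gradient_descent_1d`, the learning rate of 0.1 and the fixed number of steps are illustrative choices, not part of the original notes. The quadratic used here could of course be solved by hand; it only stands in for a function where $f'(x) = 0$ has no closed-form solution.

```python
def gradient_descent_1d(df, x0, learning_rate=0.1, n_steps=100):
    """Minimize a 1-D function given its derivative df, starting from x0."""
    x = x0
    for _ in range(n_steps):
        # Step against the slope: downhill is the direction of -f'(x).
        x = x - learning_rate * df(x)
    return x


# Example: f(x) = (x - 3)**2 has derivative f'(x) = 2 * (x - 3) and its minimum at x = 3.
x_min = gradient_descent_1d(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))  # → 3.0
```

Each step shrinks the distance to the minimum by a constant factor here ($1 - 2 \cdot 0.1 = 0.8$), which is why a fixed step count is enough for this toy case.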

Formal Definition

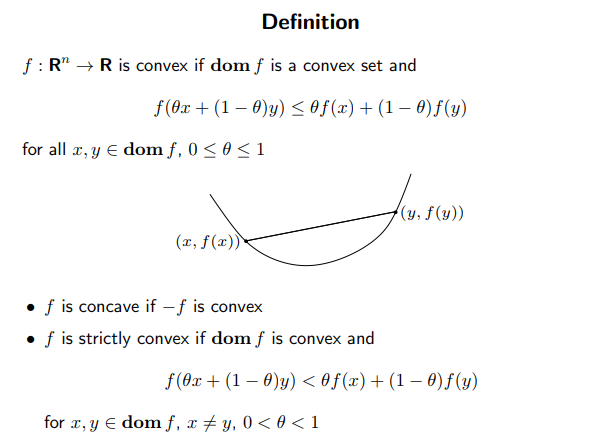
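When $x$ is a vector, the derivative becomes the gradient $\nabla f(x)$ and every coordinate moves against its own partial derivative. A minimal sketch, assuming plain Python lists for vectors and a hypothetical stopping rule (halt once the length of the gradient drops below `tol`); `gradient_descent` and the quadratic example are illustrative names and choices, not taken from the definition.

```python
import math


def gradient_descent(grad, x0, learning_rate=0.1, tol=1e-8, max_steps=10_000):
    """Minimize f: R^n -> R given its gradient; stop when the gradient is tiny."""
    x = list(x0)
    for _ in range(max_steps):
        g = grad(x)
        # Stop once the gradient norm is close to zero (approximately a stationary point).
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            break
        # Move every coordinate a small step against its partial derivative.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Example: f(x, y) = (x - 1)**2 + 2 * (y + 2)**2, minimized at (1, -2).
def grad_f(p):
    x, y = p
    return [2 * (x - 1), 4 * (y + 2)]


print([round(v, 4) for v in gradient_descent(grad_f, [0.0, 0.0])])  # → [1.0, -2.0]
```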

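Why does $f$ need to be convex and differentiable? Differentiable, because every step of gradient descent uses the gradient of $f$. Convex, because for a convex function every local minimum is also a global minimum. So with a convex function (and a small enough step size), we know we are descending towards the global minimum rather than settling into a merely local one.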
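A small experiment makes the point concrete. It assumes a fixed learning rate of 0.01 and 2000 steps; the helper `descend` and the non-convex function $x^4 - 2x^2 + 0.5x$ are hypothetical choices for illustration. The convex $x^2$ is minimized from two different starting points, and so is the non-convex function.

```python
def descend(df, x0, learning_rate=0.01, n_steps=2000):
    """Plain 1-D gradient descent with a fixed learning rate and step count."""
    x = x0
    for _ in range(n_steps):
        x -= learning_rate * df(x)
    return x


def convex_df(x):
    # Derivative of the convex f(x) = x**2, whose only minimum is at 0.
    return 2 * x


def nonconvex_f(x):
    # Hypothetical non-convex function with two local minima.
    return x**4 - 2 * x**2 + 0.5 * x


def nonconvex_df(x):
    return 4 * x**3 - 4 * x + 0.5


for x0 in (-2.0, 2.0):
    end_convex = descend(convex_df, x0)
    end_nonconvex = descend(nonconvex_df, x0)
    print(f"start {x0}: convex ends at {end_convex:.3f}, "
          f"non-convex ends at {end_nonconvex:.3f} (f = {nonconvex_f(end_nonconvex):.3f})")
```

Both convex runs end at (approximately) 0. The non-convex runs do not agree: starting at $-2$ ends near $x \approx -1.06$, the global minimum, while starting at $2$ ends near $x \approx 0.93$, a local minimum with a higher value of $f$.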

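The formal definition of a convex function is not very intuitive. For this write-up, an intuitive rather than rigorous picture is enough: a convex function is bowl-shaped, so the straight line between any two points on its graph never dips below the graph.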
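For readers who want the rigorous version anyway, the standard definition states the "chord never dips below the graph" picture as an inequality, for a function $f$ on an interval:

```latex
f\big(\lambda a + (1-\lambda) b\big) \le \lambda f(a) + (1-\lambda) f(b)
\quad \text{for all } a, b \text{ in the domain and all } \lambda \in [0, 1].
```

For a twice-differentiable function of one variable, this is equivalent to $f''(x) \ge 0$ everywhere, which is an easier condition to check in practice.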

Toy Example

Josh Starmer gives a good concrete example of how to use gradient descent to fit a linear regression model to a toy dataset.
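A minimal sketch in the same spirit, fitting a line $y \approx b + m x$ by gradient descent. It assumes the loss is the sum of squared residuals (a common choice for linear regression); the helper `fit_line`, the starting guesses, the learning rate and the toy data points are all hypothetical and not taken from Josh Starmer's example.

```python
def fit_line(xs, ys, learning_rate=0.01, n_steps=10_000):
    """Fit y ≈ intercept + slope * x by gradient descent on the sum of squared residuals."""
    intercept, slope = 0.0, 1.0  # arbitrary starting guesses
    for _ in range(n_steps):
        residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
        # Partial derivatives of SSR = sum(r**2) with respect to each parameter.
        d_intercept = sum(-2 * r for r in residuals)
        d_slope = sum(-2 * x * r for x, r in zip(xs, residuals))
        # Update both parameters simultaneously, against their gradients.
        intercept -= learning_rate * d_intercept
        slope -= learning_rate * d_slope
    return intercept, slope


# Hypothetical toy data lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
intercept, slope = fit_line(xs, ys)
print(round(intercept, 3), round(slope, 3))  # → 1.0 2.0
```

The sum of squared residuals is convex in the intercept and slope, so this is exactly the setting where gradient descent is guaranteed to head towards the global minimum, provided the learning rate is small enough (a much larger one can make the updates overshoot and diverge).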